5 things you should know before buying accessibility audit and accreditation services

Note on February 2021 update:

This blog post has been popular ever since I wrote it back in 2013. The original version was a little about accessibility audits, and a lot about accreditation.

But a lot has changed since then. So I felt like it was time for an update.

There are more and more companies out there in 2021 that could do an accessibility audit for you: some with a great pedigree, others new to the stage. Some are expensive. Others seem really cheap. So, do you get what you pay for? And what is worth paying for?

Here’s what you need to know in 2021, all based around the concept of the “total cost of ownership” of accessibility for you, in the situation you find yourself in:

- Why do you want an audit?

- Understanding you get what you pay for in an audit

- It’s not the cost of the audit that matters, it’s the round-trip “cost of ownership” that does

- It’s all about the journey, not the false start

- What sort of accreditation is actually valuable in 2021?

1. Why do you want an audit?

The first thing to think about is why you want an audit.

In our experience, there are a few key reasons:

- You’re looking to assess your website or app’s accessibility to find any weaknesses so you can fix them all:

- This wins you peace of mind on compliance, and also real progress in making your site or app accessible to more people, which can deliver the benefits of more customers and lower customer-support costs.

- You need a comprehensive audit service that will support your product’s accessibility progress, rather than just paying for a minimal report.

- You want to assess the level of accessibility risk in your site or app, and do what’s necessary to meet minimum requirements:

- It could be that you are a software-as-a-service company with a new client asking about your service’s accessibility, so you want to show some movement towards accessibility compliance, but you don’t think it’s a business priority to do too much yet. You need a VPAT and/or Accessibility Statement to sell your product to them, backed by enough of an accessibility audit to complete each one to a minimal level.

- If you’re not going to fix more than the top 5 or 10 easiest and cheapest things to fix, you need a light-touch audit that focuses on your key user journeys, and on quick fixes and workarounds for the top issues found.

- But be aware that accessibility statements that are not kept up to date, and that promise to fix things at some vague point, become less and less effective as a risk mitigator over time.

- You want to assess the level of accessibility, but you’re not actually planning to do anything about it:

- It could be that you need to baseline the accessibility of an old product that few people use, or of a product that you’re going to totally rebuild for a v2. If you aren’t going to fix things, or reuse the site’s design or code, there may be little to learn from knowing where its accessibility was strong or weak.

- A minimal report is all you need for the record.

2. Understanding you get what you pay for in an audit

Here I’m going to let you into a bit of a secret.

The cost of an audit, like any testing, depends on how much your supplier tests, how deeply they test, how much documentation you want them to write, and how much quality you want them to bring to each of those aspects of the audit:

- Finding the issues:

- The cost of this depends on the number of pages you want audited, and the complexity of those pages. This is why audit suppliers will need to review your site to scope out the number of pages they need to audit. The only way to do an audit without this sort of scoping is to do something like our Live Audits, which are fixed-length and based on your site or app’s 3 key user journeys.

- It also depends on how many devices, browsers and assistive technologies you’re testing against, which depends on the product and its audiences. This could be simple and inexpensive: for example, if you are testing an intranet where you know all your staff will be using a PC running Windows 10, with only IE11 and the Jaws screen reader (because that’s what you provide to staff who are blind); or testing a mobile app which is only available on iOS. Or it could be complex and very expensive, if you’re testing a public website, which people could use on a laptop (PC and Mac, with all the different browsers and multiple assistive technologies on each of those) or on mobile (iOS and Android, with the different assistive technologies on each of those).

- Finally, the cost is also affected by the point at which you’re happy for the auditor to stop looking for further examples of an accessibility issue (defect) once they’ve already found many. For example, at Hassell Inclusion, we often find 4 or 5 types of fail under each WCAG success criterion (checkpoint), each requiring a different type of fix. We document up to 3 or 4 instances of each one. Then, if you are going to fix issues, you can look for any others yourself.

- Analysis of the fails:

- The cost of this depends on the number of fails found, and on whether standard ways of fixing them would work for your site or app (which takes very little time) or whether some are new issues that only show up with some assistive technologies or because you’ve used unusual coding patterns (which can take a lot of time to understand).

- This is the first hint that the real cost of your audit actually depends on how good or bad the accessibility of your site or app is.

- Write-up of the fails into your audit report:

- The cost of the audit massively depends on how many issues the auditor needs to document. Even with a great report template to speed this up, this again depends on the number of issues they find, and the amount of information they provide in the report to help you fix things.

- If an audit finds very little wrong, it could take a few hours to write up a short report telling you that all is well. If your site or app fails most of WCAG, the report could go on for hundreds of pages, which could take weeks to write.

All accessibility auditors know that these are the factors that will dictate the cost of the audit. They’ll be able to control the number of pages to test (the scope) when giving you a quote for the audit. But they won’t be able to predict the number of fails they find. So they won’t know how much the audit will actually cost.
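To see why an auditor can fix the scope but not the final cost, it can help to write the cost drivers above as a toy effort model. The Python sketch below is purely illustrative: the `AuditScope` type, the hourly rates and the assumption that effort simply adds up per page and per fail are all hypothetical, not how Hassell Inclusion or any other supplier actually prices an audit.

```python
from dataclasses import dataclass


@dataclass
class AuditScope:
    pages: int           # pages (or screens) agreed in the scope
    configurations: int  # device/browser/assistive-technology combinations tested


# Hypothetical effort rates, in hours (illustrative assumptions only).
HOURS_TO_TEST_PAGE_PER_CONFIG = 1.0
HOURS_TO_ANALYSE_FAIL = 0.5
HOURS_TO_WRITE_UP_FAIL = 0.5


def estimated_hours(scope: AuditScope, fails_found: int) -> float:
    """Testing effort scales with the scope; analysis and write-up scale with the fails found."""
    testing = scope.pages * scope.configurations * HOURS_TO_TEST_PAGE_PER_CONFIG
    per_fail = HOURS_TO_ANALYSE_FAIL + HOURS_TO_WRITE_UP_FAIL
    return testing + fails_found * per_fail


scope = AuditScope(pages=10, configurations=3)
print(estimated_hours(scope, fails_found=20))   # 50.0
print(estimated_hours(scope, fails_found=200))  # 230.0
```

With the scope held fixed, the estimate moves from 50 to 230 hours purely because more fails turn up, and that difference is exactly what the auditor cannot know when quoting.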

However, you want a fixed quote. So they’ll estimate the number of days they think it will take them, and then fit the audit into that number of days, under-reporting any difficulties they don’t have time to look into. So it’s worth agreeing with your auditor beforehand whether they should contact you during the audit to keep you informed of how it’s going.

There are three things to understand out of this:

- Auditors who have been auditing for a long time are likely to be quicker at finding issues than those who are new to it, and more accurate at finding all the issues.

- Organisations which have been auditing for a long time are likely to have a huge library of fixes that the auditor can quickly put into your report to suggest a fix for issues found in your site or app. Those who are new to the game would need to cut and paste from WCAG (which can be difficult to understand), or write their own (which might take lots of time).

- That goes double for any complex issues found that only occur in some assistive technologies (especially lesser-known ones like Claroread, for people with dyslexia) or are not part of a “standard” fix. The best auditors, who have been auditing many types of product for years, will either have these fixes in their database from previous experience or can use that experience to find fixes for totally new issues quickly. They may ask you questions about the product’s target users, the systems/suppliers used to create the product, and how easily you can make changes, and use that information in making recommendations. If they have experience of how people with disabilities use assistive technologies in real life, they may also be able to suggest workarounds to solve problems at minimal cost, where fixes are difficult to make. Those new to auditing may really struggle.

These are the reasons we think it’s a good idea to find out:

- how long the organisation that is pitching you an audit has been auditing for, and

- how long the specific auditor(s) who will be doing your audit have been auditing for.

While an experienced auditor, working for an experienced accessibility company, is likely to command a higher day-rate, they will also get you a much better quality report, in fewer days.

You really do get what you pay for. To use a healthcare analogy:

- Cheap healthcare:

Patient: Doctor, my back hurts

Doctor: Here are some painkillers – bye

- Expensive healthcare:

Patient: Doctor, my back hurts

Doctor: How long has it been hurting for? Maybe some physio would help? Let’s look to see what’s caused it… Maybe get a better chair at work to make sure it doesn’t come back?

So, if you care about being able to fix things found in the audit, it’s worth buying quality.

If you aren’t going to make fixes, you may be able to get away with cheap.

Which brings me to my key point…

3. It’s not the cost of the audit that matters, it’s the round-trip “cost of ownership” that does

I’m guessing that you, like me, have bought many printers in your time. They rarely last more than a few years, and seem to have been made to become obsolete. Which is the exact point.

The key thing when you’re buying a printer, if you’ve read any good reviews, is: the total cost of ownership.

The cost of the printer to you isn’t that initial loss-leader cost – it’s that cost, plus the cost of running that printer until you replace it. And that’s dependent on how many pages (of black and white or colour printing) that you expect to do each year, and how much the toner/drum/ink cartridges will cost to do that printing.

Some printers (usually inkjets) are very cheap to begin with, then sting you in the cost of the ink. Whereas others (usually laser printers) initially seem expensive, but then the toner and drums last for so much longer that the total running cost per page is much cheaper.
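Here’s the same total-cost-of-ownership arithmetic as a minimal Python sketch. The purchase prices, cartridge prices, cartridge yields and page volumes are invented for illustration, not real product data; the point is only the shape of the sum: purchase price plus the consumables needed over the printer’s life.

```python
import math


def total_cost_of_ownership(purchase_price: int, cartridge_price: int,
                            pages_per_cartridge: int, pages_per_year: int,
                            years: int) -> int:
    """Up-front price plus the consumables needed for every page printed."""
    total_pages = pages_per_year * years
    # A partly used cartridge still has to be bought, so round up.
    cartridges_needed = math.ceil(total_pages / pages_per_cartridge)
    return purchase_price + cartridges_needed * cartridge_price


# Hypothetical figures: 2,000 pages a year for 3 years.
inkjet = total_cost_of_ownership(50, 30, 300, 2000, 3)   # cheap to buy, costly ink
laser = total_cost_of_ownership(200, 60, 2000, 2000, 3)  # dearer to buy, long-lasting toner
print(inkjet, laser)  # 650 380
```

In this made-up example, the printer that costs four times as much to buy ends up costing less overall.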

It’s the same with accessibility audits.

At Hassell Inclusion we believe in minimising the total cost of accessibility, not minimising the cost of an audit:

- Spending less money on an audit to find fewer of the problems in your product isn’t good sense if the goal is to fix things.

- Spending less money on an audit is good sense when you’ve already found all the issues you could find yourself beforehand, because you’ve embedded accessibility testing in your process. If you have done this, you should expect lower audit costs from your supplier (see our case study for how we did this for Scope, to award-winning effect).

However, if you still only have budget for a cheaper audit, we’d suggest that you concentrate the audit on the key user journeys on your site, via our Live Audits, rather than going to an agency that appears cheaper and says it can look at the whole of your website, but actually doesn’t have time to document all of the issues it finds.

Why? Because a Live Audit highlights key issues, helps you add them into your bug tracking system so you can find them on other pages, and most importantly means you are making progress towards fixing things to make your site more accessible to more people, while teaching you things to stop you making the same mistakes next time.

You need to look at the cost of the audit as the down-payment on the costs of the rest of the journey, not the sole payment.

4. It’s all about the journey, not the false start

An accessibility audit starts you on a journey. And how far you go on that journey depends on what you want to get out of it. Is it just to assess your risk? To mitigate your risk? To help people actually use your service and get some benefits to your business? Or to prove to your customers that they should remain loyal to you because you’re going to keep making what you offer work for them?

The choice is yours.

If you just want the piece of paper saying how bad your site’s accessibility is, without wishing to improve it, then spend as little as you can on it, because in reality it’s worth very little.

But if you want to come on the journey to where the real value is, then pick an accessibility company that can take you all the way on that journey, takes time to understand your user journeys and customer touch points, and can do all of this while minimising your total cost of ownership.

We’re not the only company out there who can do that, but I would caution you against choosing companies that “dabble” in accessibility. The demand for accessibility audits in 2021 is high, so we welcome other companies in the accessibility audit market, as long as they provide a good service, with auditors who are well trained and able to audit well.

However, as many organisations found in 2020, choosing companies solely based on price may sound like a good idea, but often turns out to be a false start. We have many clients who’ve come to us having learnt that the hard way – having been delivered audits that were rushed, lacked detail, didn’t take their user-base or customer journeys into account, and just weren’t good enough – and now need help to figure out what to do next.

We’re not a usability company who do a bit of accessibility testing on the side. Or a digital agency who do accessibility audits because we’ve done a few for our own products. We’re a company who can not only give you a great audit, but also help you fix the deep technical issues in your product. We are a company that can help you change the way you do your design and development, so that you don’t have so many problems next time. We’re a company who can train you to test your own accessibility as you create products, so we can charge you less for audits just before launch because we’ll know there will only be minor issues in them to report on.

We’re a company who can help you get good at accessibility. So you get the benefits of accessibility, not just the costs.

If that’s what you want, come to us.

As the CEO of Galaxkey says:

“The advantage of working with Hassell Inclusion was their executives understand the software development process. This made the entire experience seamless for our team to understand and then implement. The last thing any software product company needs is to redesign everything. But the solutions provided by Hassell were easy to implement and super practical.”

5. What sort of accreditation is actually valuable in 2021?

Finally, we come back to accreditation, which was my key point back in 2013.

A lot of people want to get some sort of accreditation for having done an audit, and fixed a lot of the stuff found. It’s not been easy, so they want some sort of badge, to make all that work worth it.

That’s understandable. But that’s not the thing that you need. Because the accreditation is of one ‘fixed’ product at one point in time – that on one day, that one site/app was compliant.

That’s a major achievement. But, I’m afraid, it’s not the hardest part of accessibility.

Keeping your site or app accessible and compliant is the hardest part. Because tomorrow someone can add two new pages that are both inaccessible, and then you’re back in trouble again.

Ideally, what you want is proof not that your website on a single day is accessible, but proof that your company has actually got the processes in place to keep it accessible, all of the time. And to not just keep your website or app accessible, but all the other digital things you own as well (like your social media and your intranet).

That’s why people rarely talk now about the accessibility badges I talked about in 2013. And why they are talking instead about how to get accredited against organisational accessibility standards like ISO 30071-1.

Check out our article on How to make accessibility a habit in your organisation rather than an add-on, and you’ll see what accreditation looks like in 2021.

Need help?

If you need any help in deciding what accessibility audit is right for your organisation, please contact us, and we’ll be delighted to help you.

Want more?

If this blog has been useful, you might like to sign up for the Hassell Inclusion newsletter to get more insights like this in your email every other week.

The original January 2013 blog:

From WCAG 2.0 AA and Section 508 VPATs to RNIB/AbilityNet Surf Right, DAC and Shaw Trust accreditation, there are a lot of accessibility conformance badges out there.

So how do you know which badge to pick? What is the actual value of these badges to the organisations that buy them, and to the disabled people who use their sites?

These are questions clients regularly ask me, so here’s a guide to choosing the best accessibility accreditation for your website.

1. Why is there more than one accreditation badge?

Yes, there are lots of badges to choose from.

While WCAG 2.0’s level A, AA and AAA badges are close to a de facto standard for accreditation, many organisations that carry out accessibility audits soon become aware of the limitations of WCAG’s conformance levels and create their own-brand accreditation badges, based on their own experience, as a value-add for clients.

In the UK most major organisations that offer accessibility audits have their own badge: DAC’s Accreditation, Shaw Trust’s Accreditation, RNIB See It Right and Surf Right badges and RNIB logo standard with UseAbility.

Accreditation in the United States happens somewhat differently, with VPAT certificates of compliance with Section 508 guidelines often the result of accessibility audits.

And, in December 2012, the Hong Kong Office of the Government Chief Information Officer (OGCIO) launched a Web Accessibility Recognition scheme, which encourages local businesses, NGOs and academia to apply for a free accessibility audit and award of accessibility accreditation badges at new Gold and Silver levels that they have defined.

Unless harmonisation occurs in the accessibility audit market, there are likely to be more, not fewer, options for accessibility accreditation in the future.

So, when you’re choosing an organisation to audit and accredit your site, ask them about the strengths and limitations of the benefits their badge will bring you, as well as the costs. And choose the badge that gives you your preferred balance of costs and benefits.

While costs are easy to assess, benefits are harder. You’ll need to consider two dimensions of benefit:

- value to you – the company owning the website; and

- value to the disabled and elderly users of your website

2. Does the badge bring value to the company owning the website?

Accessibility conformance badges make organisations feel more secure about accessibility.

Chances are you’re interested in a badge because you feel it gives you some sort of external, independent proof and public recognition that you’ve achieved a particular level of accessibility.

If you’re investing in an accessibility audit, you might as well pay a bit more for a badge that summarises the results of that audit in a simple way you can share with your users.

All such badges promise this, other than the WCAG 2.0 conformance badges and Section 508 VPATs, whose value has increasingly been questioned because, unlike other badges, you can award them to your own products, rather than having to pay someone else to do the testing and accreditation for you.

This sense of security is valuable, especially for your organisation’s reputation management and PR. Displaying the results of testing against a set of accessibility metrics helps prove that your website’s stated commitment to accessibility has actually achieved good results, independently verified by a reputable auditor.

But part of this sense of security is also potentially misplaced. Having a badge doesn’t necessarily mean your site is usable by disabled people. It won’t guarantee you won’t get sued under discrimination legislation, or save you from having to deal with accessibility complaints from disabled users via email, Twitter or Facebook.

So, when you’re choosing an organisation to audit and accredit your site, ask for details on how their audit and badge is going to give you the level of accessibility security that you desire (and are willing to pay for).

3. Does the badge bring value to the user using the website?

It’s more important for a website to be usable and an experience that users would want to repeat than to have a badge put on it.

In my experience, once the user has arrived at a website they will work out pretty quickly whether the site is accessible to their needs.

The presence of a badge on the site doesn’t really help. On the contrary, if a person with a disability can’t use your website, but sees a badge on it, that person is likely to become confused and annoyed – they know they aren’t getting a good experience, but some organisation out there that claims to speak on their behalf has said they should be.

What is useful is the testimonial aspect of the badge. To give an example: if I were a person with a disability in Hong Kong and I was looking for an online retailer, what would really help me would be the equivalent of a price comparison website that informed me of the accessibility badges that various competitor retailers had achieved. I’d then be able to see which was the most accessible, and use that information to help me decide which websites I would want to visit.

Accessibility badges are more like a Which? Product Review or Kitemark – the mark is of little value when you’ve already bought the product (or, in this case, visited the website); you need it when you are choosing which of the products that are available (websites to visit) will be most suitable for your particular set of needs and preferences.

So, when you’re choosing an organisation to audit and accredit your site, ask if they will add a link to your site to a directory of sites they have accredited and ask how well they promote that directory.

If the Hong Kong government wishes to “show appreciation to businesses and organizations for making their websites accessible” they should create an accessible directory of the sites they accredit, as this would be a useful guide for the disabled people the government wishes to help, and would help drive disabled people to the sites that have done the work to gain the ‘Gold’ level.

After all, ‘gaining more users’ is a much more tangible reward than ‘appreciation’.

Of course, the creation of one directory that amalgamated details of all accredited websites in each country, whichever accreditation badge they achieved, would be even more useful for users and organisations.

Such a directory wouldn’t be difficult to create, and – based on the limited number of organisations that have taken the trouble to get their websites accredited so far – wouldn’t currently be that difficult to maintain.

However, the value of such an amalgamated directory to users is constrained by one complicating factor – with so many different badges being used, will users be able to work out what each badge means?

4. What does the badge actually mean? – the testing metrics

The most important ‘meaning’ of a badge is the testing that it summarises. Its value is totally dependent on the value of the metrics being tested against.

Firstly, badges differ in the checkpoints (usually known as ‘success criteria’) that sites are tested against:

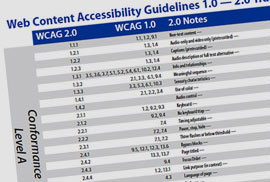

- WCAG 2.0 A, AA and AAA badges are awarded for conformance with WCAG 2.0’s success criteria, which are allocated to different conformance levels.

- Many other badges are effectively critiques of the relative importance of the various success criteria that make up the different WCAG 2.0 levels, and WCAG’s insistence that sites achieve all success criteria at a given level. For example: the Hong Kong Scheme’s Gold badge includes a mixture of some WCAG 2.0 AA and some WCAG 2.0 AAA checkpoints. This is no doubt based – as are the metrics behind other badges – on the views and experience of those who set up the scheme on which checkpoints really matter to disabled people, and which may be too costly to implement in practice.

Secondly, badges differ in whether testing is done solely by experts, or includes some testing by disabled users:

- Some badges just test the technical accessibility of sites – such as general WCAG 2.0 audits and RNIB See It Right and Surf Right badges. However, as any accessibility specialist worth their salt, and recent academic research, can tell you, WCAG 2.0 audits can miss numerous problems that disabled people experience with web pages.

- Thankfully, an increasing number of badges – such as JISC TechDis Accessibility Passport, DAC’s Accreditation, Shaw Trust’s Accreditation and RNIB’s logo standard with UseAbility – also include user-testing by people with disabilities, to test whether or not good technical accessibility results in usable experiences for disabled and elderly people.

So, when you’re choosing an organisation to audit and accredit your site, ask what success criteria they will audit against, and whether they will include any user-testing with people with disabilities.

5. What does the badge actually mean? – transparency and ease of understanding

The second important aspect of the ‘meaning’ of a badge is whether people with disabilities can easily understand if the badge will predict whether a site will work for their particular needs.

While individuals may be interested in how well a website meets the needs of all people with disabilities, they are much more likely to be interested in whether it will meet their own particular needs.

And this is where almost all badges fall down.

Different groups of disabled people have very different needs from each other. So, ideally, a useful badge would indicate clearly whether a site is suitable for a particular group’s needs.

Unfortunately, no badge that I know of does this, other than JISC TechDis’ accessibility passport for eLearning resources, which includes information on how accessible a resource is for people with different types of disability.

WCAG 2.0 conformance levels and all other accessibility badges mix up the success criteria that different disabled groups are particularly sensitive to. This means disabled people need to understand which success criteria are particularly important to their needs, and then work out what level each accreditation scheme assigns those success criteria to.

This means it is essential for accessibility accreditation badges to be transparent about what they mean.

DAC’s clickable accreditation badges (for example, see the DAC accredited badge at the bottom of the WY Metro Accessibility page), which link to a Certificate of Accreditation explaining what was tested and when, are a good start.

Ideally such certificates would also include more details of the success criteria tested, with any deficiencies in the site’s accessibility – arranged by disability/impairment – clearly and simply highlighted, as recommended in BS 8878’s advice on How to Write an Effective Accessibility Statement.

So, when you’re choosing an organisation to audit and accredit your site, ask what mechanisms they will provide for disabled people to easily understand how the badge will predict whether a site will work for their particular needs.

Final thoughts – will the Hong Kong scheme impact accreditation more widely?

The Hong Kong government scheme is a bold and interesting initiative, and could be a great chance for its web industry to move forwards in its understanding and implementation of accessibility and inclusive design.

It will be useful in the future to see what the results of the scheme are:

- Whether free audit and accreditation results in more accessible sites in Hong Kong.

- Whether there are lessons that can be shared with other governments considering doing something similar.

- What impact (if any) it has on other organisations that provide accreditation services.

I’ll certainly be tracking what information they release later this year.

Comments

James says

Organisations should also remember that any day after getting their badge they can easily do something to their site which invalidates it.

Jonathan Hassell says

Good point, James.

One of the biggest risks for the accessibility of a website is when you pass your new site over from the team creating it to the team maintaining it.

This is why accreditations should always come with a time period in which they are valid.

I think DAC’s accreditation certificates last a year, after which a refreshed audit is needed, which seems about right. Too short a period sounds like a licence for the agency providing the certificate to print money. Too long a period is likely to damage the reputation of the badge, as a site’s accessibility does tend to degrade over time and maintenance.

Sarah Bourne says

Just a brief clarification: The VPAT, used in the US, is a statement of compliance rather than a certificate. While some companies have third parties conduct audits and create their VPATs, many more just complete their own, with varying knowledge about accessibility. While you can generally accept any defects listed as being true, it is not wise to assume that assertions of compliance are true.

Jonathan Hassell says

Thanks for this, Sarah.

Yes, the VPAT is officially a statement rather than a certificate, just like WCAG 2.0 conformance badges.

As you say, another problem with badges and statements is that you don’t know whether you can trust them.

A VPAT or WCAG 2.0 conformance badge could have been created by the website owners without the proper understanding of the standards they are checking against. Or they could have been created as the result of an experienced third-party doing an audit.

While it’s relatively easy to see if a VPAT looks reliable, because you can see some of the assessment work that went into its production, it’s impossible to tell from looking at a WCAG 2.0 conformance badge on a website whether you can trust it.

That’s another reason why audit agencies have come up with their own accreditation badges – so that sites awarded the badge can let users know that a named, trusted agency has done the accreditation, not the website owners themselves.

Ryan Benson says

I would suggest NOT making this claim: “Yes, the VPAT is officially a statement rather than a certificate, just like WCAG 2.0 conformance badges.” A VPAT, in the US Federal Government sphere, is the thing that has any weight. If anybody says this site/app is compliant simply because they have a WCAG 2.0 conformance badge, most federal agencies would say “Ok that is nice, but give me a VPAT.” Why? The US Department of Justice won’t care if you have a WCAG and RNIB, they require a VPAT.

Some agencies have guidance on how they require a VPAT to be filled out, such as HHS (http://www.hhs.gov/od/vendors) and SSA (http://www.ssa.gov/accessibility/contractor_resources.html). These “customized” VPATs help companies know what they want to see and weed out when people do a VPAT in 5 minutes versus actually evaluating their product.

Jonathan Hassell says

Hi Ryan,

Where they have any regulations on accessibility of publicly procured solutions, the governments of different nations currently require different documents to ‘prove accessibility’.

The VPAT is the ‘price of entry’ to selling IT solutions to US Federal Government.

And it looks like WCAG 2.0 AA compliance will be the ‘price of entry’ to selling IT solutions to EU governments, if the European Commission’s proposed directive on “Accessibility of Public Sector Bodies’ Websites” is approved.

But this situation is likely to change, as the EC directive is trying to harmonise EU nations’ regulations around WCAG 2.0 AA, the work on refreshing Section 508 is also focusing on WCAG 2.0 AA, and there has been much discussion between the European Commission and the US Access Board on such harmonisation…

Sveta says

I feel that putting a badge on a website does not guarantee it’s 100% accessible, especially if the website is maintained via a CMS by people who are not very familiar with accessibility and may create new content that is not accessible. I have come across some websites claiming to meet the AAA success criteria that in reality barely meet level A!

Jonathan Hassell says

Thanks, Sveta.

Yes – as I discuss with James below – maintenance is a real limitation to the reliability of a certification over time.

Moreover, I don’t believe it’s possible for a website to be “100% accessible”. Even for WCAG 2.0 AAA sites, there will likely be usability problems that WCAG 2.0 didn’t cover. And there will always be someone with a rare condition, or combination of conditions, that will still have difficulties using the most accessible sites because the guidelines don’t cover their particular needs. This is what website accessibility personalisation is designed to handle (see GPII).

The important thing is for site owners to know what the most common barriers are, and how large the groups are that are affected by those barriers. That’s the most pragmatic place accessibility work should start. And then organisations should move on from there to issues that affect smaller groups.

Steve Green says

Unfortunately there is commercial pressure on companies that offer certifications, and I have evidence of them certifying websites that do not meet the standard they say they do.

Last year a large organisation approached us requesting a certification because their current one (from one of the organisations you mention above) was about to expire. They gave us that organisation’s test report, which had identified many serious issues that were supposed to be fixed prior to certifying the website. However, the issues were not fixed and the certification had been granted anyway.

In the end we did a lot of technical testing and user testing, reported many serious issues that should be fixed, but declined to offer any kind of certification because the client would not fix some of those serious issues.

We still plan to launch a certification this year once we have formalised a pragmatic approach to some of the difficult issues. You have discussed some of these, such as legacy content, in other blogs.

There is also the issue that websites serve many purposes – the one I mentioned above had more than 200,000 pages! Most features of such a website may be accessible to people with certain disabilities, while certain other features are not. Depending on what a person wants to do on the site, it may be perfectly accessible or totally inaccessible to them. How does certification address that, especially when the inaccessible content is old and/or not commonly accessed? A single certification level for a whole site is clearly not sufficient, yet what is the alternative?

Jonathan Hassell says

Thanks for this really useful and important perspective, Steve.

How agencies that provide certification handle commercial pressure, from site owners leaning on them to certify sites that don’t yet deserve it, is key to how reliable that certification is.

While I can see why a website owner might put pressure on an agency that is testing and accrediting their site (the legal risk aspects of accessibility often make site owners anxious to get any certification on their site quickly, so they feel they’ve done something), I think this is counter-productive for both website owner and accreditation agency:

So, while I know it may cause friction with some of your clients, I hope your decision to stick to your guns and decline to offer certification when clients’ sites don’t deserve it will serve you well over time.

The ‘formalised pragmatic approach’ you speak of – that understands legacy content, the difficulties of ensuring accessibility is maintained over time etc. – is something I touch on in my WCAG Future blog.

Felix Miata says

Many users of higher-than-average pixel density devices, and/or those with below-average vision, must be confused when they see badges on sites styled with CSS such as:

… {font-size: 16px;}

… {font-size: 15px;}

… {font-size:14px;line-height: 1.55em;}

… {font-size:14px!important}

Such micromanaged font sizing makes a user stylesheet all but worthless as a means to override site styles designed, intentionally or otherwise, to defeat user personalization on their personal computing devices. Many can’t read text styled this way without zooming first, and must wonder who it is that is missing something important about the subject of accessibility.

Because I know that effective pixel densities vary widely, and that styling text in px totally disregards the visitor’s optimum text size, my own response is that sites that size text in px are missing something fundamental about the meaning of accessibility, and are thus worthless as authorities to cite, regardless of their actual content, or the badges they display.

Absent a compelling reference from elsewhere, my initial response when I arrive on sites that disregard my browser personalization is to click the back button, badge or no badge.
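Felix’s point about px sizing can be illustrated with a minimal CSS sketch. The selectors here are hypothetical, since the examples above elide theirs with “…”. The assumption is simply that text should follow the visitor’s browser default size: leave the root font size at 100%, size text in rem, use a unitless line-height, and avoid !important.

```css
/* Hypothetical selectors; the original examples elide theirs. */

/* Keep the root size at the browser default, i.e. the visitor's chosen text size. */
html {
  font-size: 100%;
}

/* Body text at the visitor's default size instead of a fixed 16px. */
body {
  font-size: 1rem;
  line-height: 1.55; /* unitless, so it scales with the text */
}

/* Smaller text stays proportional: 0.875rem is 14px only when the default is 16px. */
.fine-print {
  font-size: 0.875rem;
}
```

With this approach, a visitor who raises the default font size in their browser settings gets proportionally larger text throughout the page, which fixed px values do not allow.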

Jonathan Hassell says

Hi Felix.

Thanks for giving such a great example of the main point of my blog: it doesn’t matter if the site has an accessibility badge on it; if the site doesn’t work for your visitors, they’ll leave it.

John Foliot says

Sadly, I believe that the true value of these types of badges is something close to nothing – a lesson I thought we learned many years ago.

I say this because these types of badges provide a false sense of “compliance” while at the same time (once again) distilling accessibility down to nothing but a checklist of dos and don’ts, with little to no thought applied in the process.

Most modern web content today is dynamic rather than static, which means that most modern content changes at varying rates. A ‘badge’ at best confirms a snapshot of compliance at the time of the evaluation, which could have been yesterday, a week ago, or 7 years ago: the end user has no idea, and the badge is meaningless to most users anyway.

About the only redeeming feature of a badge is that it raises awareness amongst developers, which has some limited positive value; however, the developers who are going to care are not going to seek out a badge (what a dated concept anyway), but will rather do the heavy lifting and work towards results. I mean, if all you really want is a badge, insert this into your source code:

If I were a troll seeking to make an example of a site that was “breaking” their “accessibility promise” the first sites I would seek out are those with these types of badges, as they are now public claims of something that I suspect they will not be able to maintain. (Good thing I’m not a troll…)

Cheers!

JF

Jonathan Hassell says

Great to have your perspective on this, John.

I totally agree, as does James below, that the maintenance of sites – especially with modern dynamic sites – is a real challenge to the value of badges.

However, some badges have shown some understanding of this challenge – for example: the DAC accreditation certificate, which is visible to users, includes the date on which it was given, and the date on which it expires.

Obviously, inaccessible content could still be uploaded to the site the day after the accreditation was given, lessening the value of the badge. But I don’t think that negates the value of the badge.

I think we’d all agree that the accessibility of a website is partly down to (1) its design and technical underpinning (the structure, page templates and content management system (CMS) that new content is created in); and partly down to (2) the content that is then added to the site using that CMS.

So, while ‘snapshot’ accessibility assessment and accreditation cannot cover new content, it definitely covers the accessibility of that design and technical underpinning, which applies to every single bit of content on the site. Moreover, you can imagine a more comprehensive badge (I don’t know if this exists yet) that includes assessment of this second aspect: how well the organisation has trained the staff who maintain its site, and whether the CMS they use enables/requires new content to be accessible before publication (including whether the CMS is ATAG compliant).

So, while I agree that sites that include badges in their ‘accessibility promise’ may often have missed doing the necessary work to keep their site at the level they’ve achieved, I think it’s important to recognise that these sites have at least assessed, at some point, whether their site actually lives up to that promise.

If I were a troll (which I’m not), the sites I would seek out would be those that included no accessibility statement, or those whose accessibility statement was obviously cut and pasted from another site.

Jake says

Thanks for this blog entry, Jonathan. I have been a System Access user for a little while, and also use NVDA. One of the features of System Access, as some of you may know, is CSAW. This stands for Community Supported Accessible Web, and is a feature that allows System Access users to label links and buttons that are not labeled, thereby making inaccessible websites accessible to other System Access users. My question is this: if this feature worked not only for System Access but for other screen readers as well, could it be considered a valid way of evaluating websites for screen reader accessibility? I’m in no way trying to criticize Serotek here. In fact, this is an amazing feature of their screen reader, and it’s among the reasons I chose to switch to System Access from another screen reader. I hope all of this makes sense. I’m just getting my feet wet with regard to all these web standards and such.

Jonathan Hassell says

Thanks for this useful idea, Jake.

I’ve not used CSAW myself, but it sounds like it’s an interesting feature.

As there’s a lot more to screenreader accessibility than labelling unlabelled links and buttons, I think CSAW on its own wouldn’t be enough to evaluate websites for screenreader accessibility. Moreover, accessibility is much more than just screenreader accessibility, as it encompasses the needs of people who use switches, speech-recognition, large fonts, alternative colour schemes, captions, screen magnification etc. etc. etc.

That said, CSAW might be an interesting tool for those performing accessibility audits to include in their audit methods. If CSAW users have already added labels to some aspects of the site, that’s already an indication that the site itself is failing to meet the needs of some of its users.

Cam Nicholl says

I quite agree with James. This is why it is so important that the accessibility services providers help each and every one of their clients embed accessibility into their processes. There are various ways to do this. In no particular order, these are methods we have found to be successful:

1. Inviting clients to spend time with the user testers during the audit of their site. This is quick and free for the client and an excellent way to show clients first hand how users of AT interact with their site.

2. Provide a captioned video of portions of the user testing sessions and request that clients upload it to their intranet and point those concerned with the daily upkeep to the video. As an extension to this and as part of an accessibility policy, staff should ideally confirm that they have watched and understood the implications or they have watched and now have questions. Any good accessibility provider should be happy to provide answers to their questions without charge. This method is useful for those larger organisations with greater volumes of staff churn.

3. Structured training sessions for staff are yet another option. We find the most impactful training sessions we deliver all include a short presentation by one of our test team, using their assistive technology to highlight commonly found issues on the client site and the barriers that they present to that user. The rest of the training is tailored to previously identified skills gaps in order to quickly encourage engagement and to maximize the effectiveness of the session.

4. Make clients aware of BS8878 and other relevant standards and guidelines, which is where I will stop and let Jonathan pick up from here…

Jonathan Hassell says

Totally agree with you, Cam.

This embedding of accessibility awareness, motivation and skill is exactly what I do for all my clients too.

In general, I find people with different job-roles require different training sessions – coders need very different training from designers, who also need very different training from content creators whose job it is to ensure that the content (text, images, audio, video) they produce and add to the site through its content management system is as accessible as they can make it in the time they have.

This full embedding of accessibility throughout a team is what BS 8878 was created to guide – readers can find more on it at: Building accessibility strategy into the culture of an organization.

Iza Bartosiewicz says

Rather than putting a badge on the end product, which will break as soon as someone forgets to add an alt attribute to an image, perhaps it would be more useful to award badges to organisations that can demonstrate a genuine commitment to accessibility (that is, they’ve embedded it in all relevant processes and activities, such as purchasing, development, design, content creation, testing, training and hiring…). This would allow us to identify organisations with a deep understanding and practical approach to accessibility that goes beyond ticking the compliance boxes.

Such a badge could give us greater certainty that the products (whatever they may be) are as accessible as possible – and will remain that way – because they were developed by an organisation that considers accessibility as an integral part of everything they do and produce, rather than an add-on (or ‘a feature’).

Jonathan Hassell says

I couldn’t agree more, Iza.

And the basis for such a ‘commitment to accessibility’ badge already exists.

Here in the UK, we have a useful way for organisations to sign up to such a commitment – see the BTAT Charter, of which Hassell Inclusion is a signatory.

And we also have the BS 8878 code of practice that provides a framework that enables organisations to live up to their commitment, by guiding them in what’s necessary to do the sort of embedding of accessibility ‘into all relevant processes and activities’ that you talk about.

While BS 8878 doesn’t include the idea of a badge, compliance with BS 8878 is demonstrated through providing evidence of that embedding, on an organisational and product level.

You can find more free information on BS 8878 at https://www.hassellinclusion.com/bs8878/

It’s well worth a look if you haven’t already.

And I’d love to hear what you think of it.

John Foliot says

I still believe that a “badge” does little more than provide a feel-good sense of security that is likely out of step with reality. I personally think that companies that are handing out such badges (sorry Steve) are also opening themselves up to being included if and when a legal challenge arises: that to me, as a business owner, would be a primary reason to display a badge – it shifts blame away from them, and over to the “testers and accreditors”. If Susan Brown is looking to challenge a company, that company can shift culpability to the firm that provided the ‘accreditation’ (badge).

To be clear, I fully believe that there *is* a role for third party evaluation firms, who come with requisite expertise and a fresh approach to evaluation unencumbered by internal politics, and I commend those companies that seek to gain that kind of insight. But a badge? They are nothing more than marketing bits from the evaluation firms (in an effort to seek more business) that rarely if ever have any real correlation to the accessibility, or lack thereof, of any site.

Jonathan Hassell says

Interesting thoughts, John. It would be interesting to know whether US law might support the culpability of accrediting organisations in legal cases. I don’t think it would in the UK…

I agree that current badges often confuse as much as enlighten. Have you checked out Jisc’s Accessibility Passport? – it was definitely an attempt to go in that enlightened direction…

Sean says

I really enjoyed the idea of these badges becoming more of a “meets this/these disability need(s)” statement. I think you could still have the levels (A, AA or AAA), but this would certainly make the whole accessibility issue much simpler to deal with for all developers. Standards are only standards if they are widely accepted and implemented. For many developers, implementing standards in this manner could be mostly automated within a CMS or other dynamic content systems. For hand coding, being able to focus on meeting specific disability needs makes life simpler and makes the value of those changes clear.

Rosalind Moffitt says

An old post, but lots of good points, and much is true of language accessibility. We can’t say language is plain, easy or clear, because it is for the recipient to decide that, and everyone is different. Hence we aim to make information ‘easier’.

Easy Read in particular is misleading, for several reasons, one of which is that it assumes that people with acquired disabilities have the same needs and abilities as people with developmental disabilities. And of course everyone is different anyway, so you can never give an absolute assurance of accessibility. But you can follow best practice.

Your point about transparency also applies to language adaptation. I have seen many documents that involved co-creation and user testing and are still of very poor quality. There is no transparency about what has been tested, or how. Our Accessible Information Ladder gives a structure for creation, delivery and evaluation of accessible information.

You may also be interested in Sense Making Optimisation – it’s no good finding information (SEO) if you can’t understand it. SMO maximises (optimises) understanding (making sense), for all audiences and media channels.

Jonathan Hassell says

Thanks for your comment, Rosalind. I’ll be sure to check out your resources.

Jonathan